Introduction:

Deploying machine learning models into production is a critical step in turning data-driven insights into practical applications. It involves moving a model from a development environment to a production environment, where end-users can access it and existing systems can integrate with it. In this blog post, we will explore the key considerations and steps involved in deploying machine learning models so that they are scalable, reliable, and performant in real-world scenarios.

Steps Involved in Deploying Machine Learning Models:

Model Packaging and Serialization:

Before a machine learning model is deployed, it needs to be properly packaged and serialized for easy transport and integration. This step involves saving the trained model parameters, the preprocessing steps applied to inputs, and a record of the dependencies (such as library versions) required for inference. Common options include Python's pickle module and joblib for general Python objects such as scikit-learn pipelines, framework-specific formats such as TensorFlow's SavedModel, and ONNX, a framework-neutral format that many runtimes can load.
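As a minimal sketch of the idea, the Python snippet below bundles a toy model's parameters, its preprocessing statistics, and some metadata into a single dictionary and round-trips it through the standard library's pickle module. The model, feature names, and version label are hypothetical placeholders; with a real scikit-learn pipeline, `joblib.dump` and `joblib.load` are used in much the same way.

```python
import os
import pickle
import platform
import tempfile


def predict(artifact, features):
    """Apply the stored preprocessing (standardization), then the linear model."""
    pre = artifact["preprocessing"]
    scaled = [(x - m) / s for x, m, s in zip(features, pre["means"], pre["stds"])]
    params = artifact["model"]
    return sum(w * x for w, x in zip(params["weights"], scaled)) + params["bias"]


def save_artifact(artifact, path):
    with open(path, "wb") as f:
        pickle.dump(artifact, f, protocol=pickle.HIGHEST_PROTOCOL)


def load_artifact(path):
    # pickle can execute arbitrary code while loading: only load files you trust.
    with open(path, "rb") as f:
        return pickle.load(f)


# Hypothetical artifact: preprocessing, parameters, and metadata travel together.
artifact = {
    "preprocessing": {"means": [10.0, 5.0], "stds": [2.0, 1.0]},
    "model": {"weights": [0.5, -1.0], "bias": 3.0},
    "metadata": {
        "version": "1.0.0",             # hypothetical version label
        "features": ["age", "income"],  # hypothetical feature names
        "python": platform.python_version(),  # runtime the artifact was saved with
    },
}

path = os.path.join(tempfile.mkdtemp(), "model.pkl")
save_artifact(artifact, path)
restored = load_artifact(path)
print(predict(restored, [12.0, 6.0]))  # 2.5
```

Keeping the preprocessing parameters in the same artifact as the weights helps guarantee that inference applies exactly the transformations used during training.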

Containerization with Docker:

Containerization using Docker has become a popular choice for deploying machine learning models. Docker packages the model, its dependencies, and the necessary runtime environment into a lightweight, self-contained image. Because the same image runs wherever a compatible container runtime is available, models can be shared and deployed across machines with far fewer dependency and compatibility problems, although the host's CPU architecture and, for GPU workloads, its drivers still need to be compatible with the image.

Scalability and Performance:

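Scalability and performance are crucial considerations when deploying machine learning models in production. Models may need to handle a high volume of requests while keeping latency low. Common techniques include running multiple model replicas behind a load balancer, model parallelism (splitting a model that is too large for one device across several devices), asynchronous processing, and batch inference, which groups requests so the hardware processes them together, raising throughput at the cost of a small added wait per request. Hardware accelerators like GPUs or TPUs can also significantly increase throughput and reduce inference time, particularly for large deep learning models.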
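To make batch inference with asynchronous processing more concrete, here is a hedged sketch of a micro-batcher built on Python's asyncio. Concurrent requests are queued, grouped into batches of up to `max_batch_size`, and passed to a single batch prediction call. `MicroBatcher` and `predict_batch` are hypothetical names, and the doubling function stands in for a vectorized model call such as one forward pass on a GPU.

```python
import asyncio


class MicroBatcher:
    """Hypothetical helper: collects concurrent requests into batches for one predict call."""

    def __init__(self, predict_batch, max_batch_size=8, max_wait_s=0.01):
        self.predict_batch = predict_batch  # callable: list of inputs -> list of outputs
        self.max_batch_size = max_batch_size
        self.max_wait_s = max_wait_s
        self.queue = asyncio.Queue()
        self.batch_sizes = []  # kept only so the example can show how requests were grouped

    async def predict(self, item):
        """Called once per request; resolves when the item's batch has been processed."""
        future = asyncio.get_running_loop().create_future()
        await self.queue.put((item, future))
        return await future

    async def run(self):
        """Worker loop: wait for a request, give others a moment to arrive, run one batch."""
        while True:
            batch = [await self.queue.get()]
            await asyncio.sleep(self.max_wait_s)
            while len(batch) < self.max_batch_size and not self.queue.empty():
                batch.append(self.queue.get_nowait())
            self.batch_sizes.append(len(batch))
            inputs = [item for item, _ in batch]
            try:
                outputs = self.predict_batch(inputs)
            except Exception as exc:
                # Propagate a batch failure to every waiting request.
                for _, future in batch:
                    if not future.done():
                        future.set_exception(exc)
                continue
            for (_, future), output in zip(batch, outputs):
                if not future.done():  # skip requests whose callers gave up
                    future.set_result(output)


def predict_batch(inputs):
    # Stand-in for a vectorized model call.
    return [x * 2 for x in inputs]


async def main():
    batcher = MicroBatcher(predict_batch, max_batch_size=4, max_wait_s=0.01)
    worker = asyncio.create_task(batcher.run())
    results = await asyncio.gather(*(batcher.predict(i) for i in range(10)))
    worker.cancel()
    return results, batcher.batch_sizes


results, sizes = asyncio.run(main())
print(results)  # [0, 2, 4, 6, 8, 10, 12, 14, 16, 18]
print(sizes)    # how the 10 requests were grouped into batches
```

For simplicity, this sketch always waits `max_wait_s` after the first request of a batch, even if the batch fills sooner; that wait is the latency cost traded for larger batches. Some model servers, such as NVIDIA Triton Inference Server, offer built-in dynamic batching along these lines.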

API Development and Documentation:

Creating a well-designed API is essential for integrating and consuming machine learning models. The API should have clear documentation covering the input data format, the response structure, error responses, and any required authentication. Popular frameworks like Flask or FastAPI can be used to build RESTful APIs that give clients a standardized way to communicate with the deployed model; FastAPI can also generate OpenAPI documentation automatically from the declared request and response types.
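The following sketch shows the shape of a minimal `/predict` endpoint with input validation and JSON error responses. To stay dependency-free it uses Python's standard-library `http.server` rather than Flask or FastAPI; the endpoint path, the two-number input contract, and the `predict` stand-in are assumptions for illustration, and `http.server` itself is not intended for production traffic.

```python
import json
import threading
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

EXPECTED_FEATURES = 2  # hypothetical input contract: {"features": [number, number]}


def predict(features):
    # Stand-in for the deserialized model's predict call.
    return sum(features)


class PredictHandler(BaseHTTPRequestHandler):
    def _send_json(self, status, payload):
        body = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def do_POST(self):
        if self.path != "/predict":
            self._send_json(404, {"error": "not found"})
            return
        try:
            length = int(self.headers.get("Content-Length", 0))
            features = json.loads(self.rfile.read(length))["features"]
            valid = (
                isinstance(features, list)
                and len(features) == EXPECTED_FEATURES
                and all(isinstance(x, (int, float)) for x in features)
            )
        except (ValueError, KeyError, TypeError):  # bad JSON, missing key, or non-object body
            valid = False
        if not valid:
            message = f"expected a JSON object whose 'features' is a list of {EXPECTED_FEATURES} numbers"
            self._send_json(400, {"error": message})
            return
        self._send_json(200, {"prediction": predict(features)})

    def log_message(self, format, *args):
        pass  # silence the default per-request log lines in this example


def post_json(url, payload):
    """POST a JSON payload and return (status code, decoded JSON body)."""
    request = urllib.request.Request(
        url, data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"}, method="POST",
    )
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))  # no proxy for localhost
    try:
        with opener.open(request, timeout=5) as response:
            return response.status, json.loads(response.read())
    except urllib.error.HTTPError as err:
        return err.code, json.loads(err.read())


server = ThreadingHTTPServer(("127.0.0.1", 0), PredictHandler)  # port 0: let the OS pick a free port
threading.Thread(target=server.serve_forever, daemon=True).start()
url = f"http://127.0.0.1:{server.server_address[1]}/predict"

ok = post_json(url, {"features": [1.5, 2.5]})
bad = post_json(url, {"features": "not a list"})
server.shutdown()
server.server_close()
print(ok)   # (200, {'prediction': 4.0})
print(bad)  # status 400 with an error message
```

With Flask or FastAPI, the handler class would become a decorated function, and FastAPI could derive both the validation and the documentation from a typed request model.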

Model Monitoring and Logging:

Monitoring deployed machine learning models is crucial for maintaining their reliability and detecting problems early. Logging key operational metrics, such as inference latency, error rates, and resource utilization, helps identify performance bottlenecks or anomalies. Because a model's predictions can degrade silently while the service itself stays healthy, it is also worth tracking the distribution of incoming data and, where ground truth becomes available, prediction quality. Health checks and alerts help ensure that issues are addressed promptly, minimizing downtime and maximizing the model's availability.
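A minimal sketch of latency and error logging, assuming a single-process Python service: a decorator wraps the inference function, records each call's latency and any failure, and feeds a simple health check. The `monitored` decorator, the 5% error-rate threshold, and the in-memory `InferenceMetrics` class are hypothetical; a real deployment would export such metrics to a monitoring system and track resource utilization as well.

```python
import functools
import logging
import statistics
import time

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
logger = logging.getLogger("model_service")


class InferenceMetrics:
    """In-process counters; a production service would export these instead."""

    def __init__(self):
        self.latencies_ms = []
        self.requests = 0
        self.errors = 0

    def error_rate(self):
        return self.errors / self.requests if self.requests else 0.0

    def p50_latency_ms(self):
        return statistics.median(self.latencies_ms) if self.latencies_ms else 0.0


metrics = InferenceMetrics()


def monitored(func):
    """Record latency and errors for every call to an inference function."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        metrics.requests += 1
        try:
            return func(*args, **kwargs)
        except Exception:
            metrics.errors += 1
            logger.exception("inference failed")
            raise
        finally:
            elapsed_ms = (time.perf_counter() - start) * 1000
            metrics.latencies_ms.append(elapsed_ms)
            logger.info("inference latency_ms=%.2f", elapsed_ms)
    return wrapper


def health_check(max_error_rate=0.05):
    """Hypothetical rule: report 'degraded' once the error rate exceeds the threshold."""
    rate = metrics.error_rate()
    healthy = rate <= max_error_rate
    if not healthy:
        logger.warning("error rate %.1f%% exceeds threshold", rate * 100)
    return {"status": "ok" if healthy else "degraded", "error_rate": rate,
            "p50_latency_ms": metrics.p50_latency_ms()}


@monitored
def predict(features):
    # Stand-in model that fails on empty input.
    if not features:
        raise ValueError("empty input")
    return sum(features) / len(features)


for batch in ([1, 2, 3], [4, 5], []):
    try:
        predict(batch)
    except ValueError:
        pass  # the decorator has already logged and counted the failure

print(health_check())  # status is 'degraded': 1 of 3 calls failed
```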

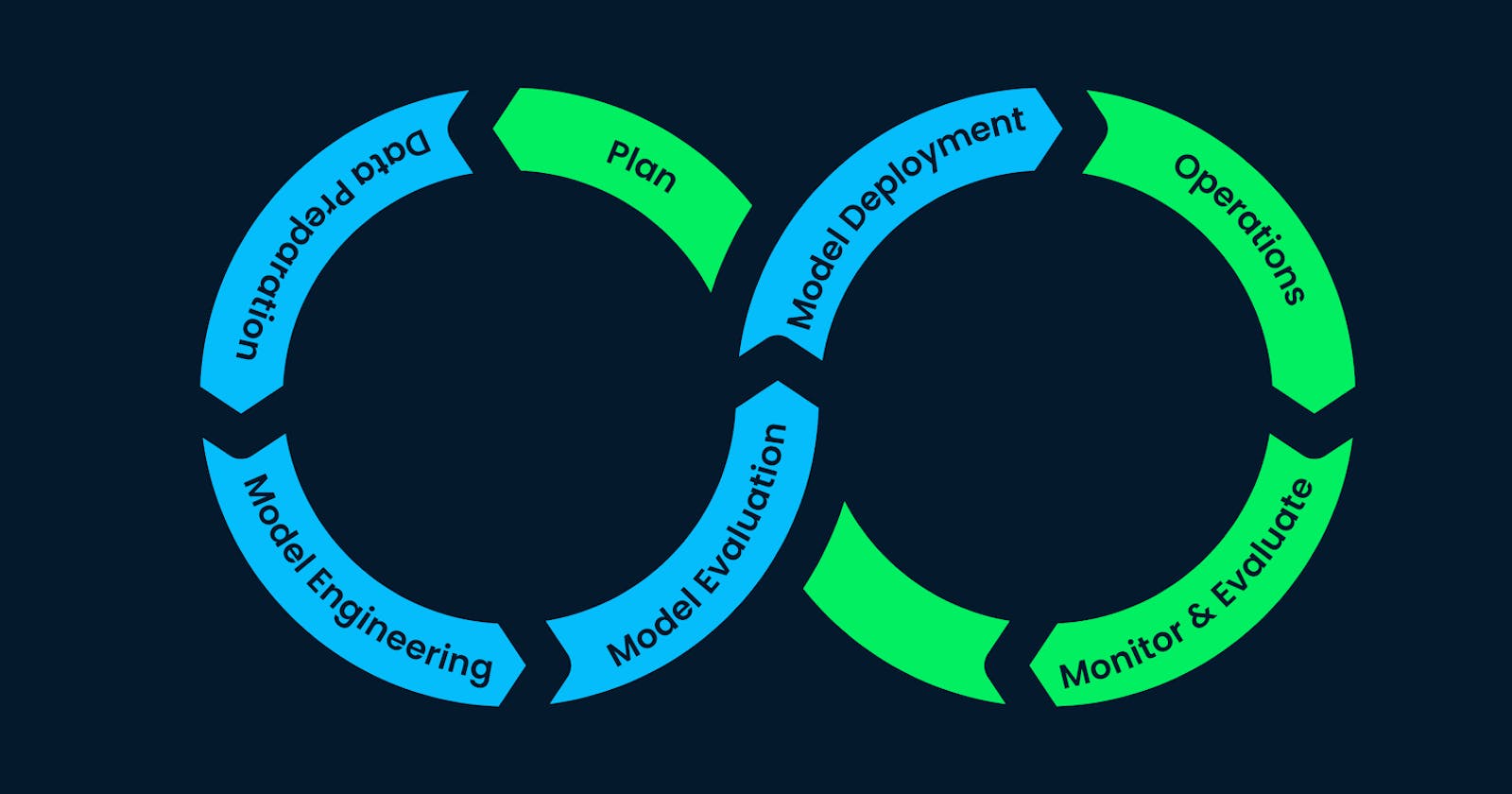

Continuous Integration and Deployment:

A continuous integration and deployment (CI/CD) pipeline for machine learning models builds on version control to automate testing and deployment to production environments. Every change to the model or the underlying codebase is tested before it is deployed, for example with unit tests for the serving code and validation checks on the model's accuracy, which reduces the risk of introducing bugs or regressions.
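One common CI/CD step for models is an automated quality gate. The sketch below, with hypothetical thresholds and toy models, compares a candidate model against the currently deployed baseline on a held-out set and passes only if the candidate meets a minimum accuracy and does not regress beyond a small tolerance.

```python
def accuracy(predict, examples):
    """Fraction of (features, label) pairs that `predict` gets right."""
    correct = sum(1 for features, label in examples if predict(features) == label)
    return correct / len(examples)


def deployment_gate(candidate, baseline, holdout, min_accuracy=0.8, max_regression=0.01):
    """Return (passed, report); the default thresholds are hypothetical and project-specific."""
    candidate_acc = accuracy(candidate, holdout)
    baseline_acc = accuracy(baseline, holdout)
    checks = {
        "meets_minimum": candidate_acc >= min_accuracy,
        "no_regression": candidate_acc >= baseline_acc - max_regression,
    }
    report = {"candidate_accuracy": candidate_acc, "baseline_accuracy": baseline_acc, **checks}
    return all(checks.values()), report


# Toy held-out set: the label is True when the single feature exceeds 5.
holdout = [((x,), x > 5) for x in range(10)]


def baseline(features):
    # Toy stand-in for the currently deployed model.
    return features[0] > 7


def candidate(features):
    # Toy stand-in for the newly trained model.
    return features[0] > 5


passed, report = deployment_gate(candidate, baseline, holdout)
print(passed)  # True
print(report)
# In a CI job, a failing gate would end the step with a non-zero exit code,
# e.g. `raise SystemExit(0 if passed else 1)`, so the deploy stage never runs.
```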

Model Versioning and Rollbacks:

Versioning machine learning models is essential for reproducibility and for rolling back quickly when problems arise. With versioned models, it is easier to track changes, compare performance across iterations, and revert to a previous version if necessary. Combined with the testing in a CI/CD pipeline, this practice helps ensure that only intended, tested versions are deployed.
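The sketch below illustrates versioning and rollback with a hypothetical in-memory `ModelRegistry`: every version is registered once and never overwritten, deployments are recorded in order, and a rollback returns to the previously deployed version. Dedicated tools (for example, the MLflow Model Registry) provide persistent versions of this idea; the class here is not their API.

```python
from dataclasses import dataclass, field


@dataclass
class ModelRegistry:
    """Hypothetical in-memory registry; real registries persist artifacts and metadata."""
    versions: dict = field(default_factory=dict)  # version -> {"artifact", "metrics"}
    history: list = field(default_factory=list)   # deployed versions, oldest first

    def register(self, version, artifact, metrics=None):
        # Registered versions are immutable, so a version label always means the same model.
        if version in self.versions:
            raise ValueError(f"version {version!r} is already registered")
        self.versions[version] = {"artifact": artifact, "metrics": dict(metrics or {})}

    def deploy(self, version):
        if version not in self.versions:
            raise KeyError(f"unknown version {version!r}")
        self.history.append(version)

    @property
    def live(self):
        """The version currently serving traffic, or None before the first deployment."""
        return self.history[-1] if self.history else None

    def rollback(self):
        """Undo the latest deployment and return the version that is live again."""
        if len(self.history) < 2:
            raise RuntimeError("no earlier deployment to roll back to")
        self.history.pop()
        return self.live


registry = ModelRegistry()
registry.register("1.0.0", artifact={"weights": [0.5]}, metrics={"accuracy": 0.91})  # illustrative values
registry.register("1.1.0", artifact={"weights": [0.7]}, metrics={"accuracy": 0.89})
registry.deploy("1.0.0")
registry.deploy("1.1.0")
print(registry.live)        # 1.1.0
print(registry.rollback())  # 1.0.0
```

This sketch treats the deployment history as a stack, so repeated rollbacks step further back; a production system would also keep a separate audit log of every deployment.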

Security and Privacy Considerations:

Machine learning models may handle sensitive data, making security and privacy crucial aspects of deployment. Implementing secure communication protocols, such as HTTPS, and applying appropriate access controls are necessary to protect both the model and the data it processes. Additionally, privacy regulations, such as GDPR or HIPAA, must be considered when deploying models that handle personal or sensitive information.
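HTTPS is usually configured at the web server or load balancer, but access control often lives in the application. As a hedged sketch, assuming clients authenticate with API keys, the snippet below stores only SHA-256 hashes of issued keys, compares them with `hmac.compare_digest`, and checks a per-key scope before allowing an action. The function names, the in-memory key store, and the scope strings are hypothetical.

```python
import hashlib
import hmac
import secrets

# Hypothetical in-memory store: SHA-256 hash of each issued key -> client record.
# Only hashes are kept, so a leaked store does not reveal usable keys.
KEY_STORE = {}


def hash_key(api_key: str) -> str:
    return hashlib.sha256(api_key.encode("utf-8")).hexdigest()


def issue_key(client: str, scopes: set) -> str:
    """Create a random API key, store its hash, and return the key once to the client."""
    api_key = secrets.token_urlsafe(32)
    KEY_STORE[hash_key(api_key)] = {"client": client, "scopes": set(scopes)}
    return api_key


def authenticate(presented_key: str):
    """Return the client record for a valid key, or None."""
    if not presented_key:
        return None
    presented_hash = hash_key(presented_key)
    for stored_hash, record in KEY_STORE.items():
        # compare_digest's timing does not depend on where the strings first differ
        if hmac.compare_digest(presented_hash, stored_hash):
            return record
    return None


def authorize(presented_key: str, required_scope: str) -> bool:
    """Allow the action only for an authenticated key that holds the required scope."""
    record = authenticate(presented_key)
    return record is not None and required_scope in record["scopes"]


key = issue_key("checkout-service", scopes={"predict"})
print(authorize(key, "predict"))            # True
print(authorize(key, "rollback"))           # False
print(authorize("guessed-key", "predict"))  # False
```

A fast hash like SHA-256 is reasonable here only because the keys are long random tokens; user-chosen passwords need a slow, salted password-hashing function instead.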

Conclusion:

Deploying machine learning models from development to production requires careful attention to several factors, including model packaging, containerization, scalability, API development, monitoring, and security. By following best practices and using tools like Docker, CI/CD pipelines, and monitoring systems, organizations can deploy their machine learning models effectively, so that they deliver reliable predictions in real-world scenarios. Staying up to date with advances in deployment techniques and incorporating feedback from end-users can further improve the deployed models over time.

It's important to note that the deployment process may vary depending on the specific requirements of the project and the chosen technology stack. Organizations should adapt the steps and considerations mentioned in this blog post to their unique needs.

By successfully deploying machine learning models, organizations can unlock the potential of their data and bring the benefits of AI to their products and services. Whether it's integrating a recommendation system into an e-commerce platform, deploying a fraud detection model in a banking system, or embedding a language translation model in a communication tool, effective deployment ensures that the models are accessible, scalable, and deliver meaningful value to end-users.

Deploying machine learning models is a crucial step in turning data-driven insights into actionable solutions. With the right deployment strategies and continuous improvement efforts, machine learning models can have a substantial impact across industries, improving decision-making processes and user experiences.